[Linkpost] “What if we just…didn’t build AGI? An Argument Against Inevitability” by Nate Sharpe

Description

Note: During my final review of this post I came across a whole slew of posts on LessWrong and this forum from several years ago saying many of the same things. While much of this may be rehashing existing arguments, I think the fact that it's still not part of the general discussions means it's worth bringing up again. Full list of similar articles at the end of the post, and I'm always interested in major things I'm getting wrong or key sources I'm missing.

After a 12-week course on AI Safety, I can't shake a nagging thought: there's an obvious (though not easy) solution to the existential risks of AGI.

It's treated as axiomatic in certain circles that Artificial General Intelligence is coming. Discussions focus on when, how disruptive it'll be, and whether we can align it. The default stance is either "We'll [...]

---

Outline:

(00:14 ) Cartoon figure and small robot contemplating "What if we just didn't build AGI?"

(03:25 ) 1. Why The Relentless Drive Towards The Precipice?

(07:23 ) 2. Surveying The Utopian Blueprints (And Noticing The Cracks)

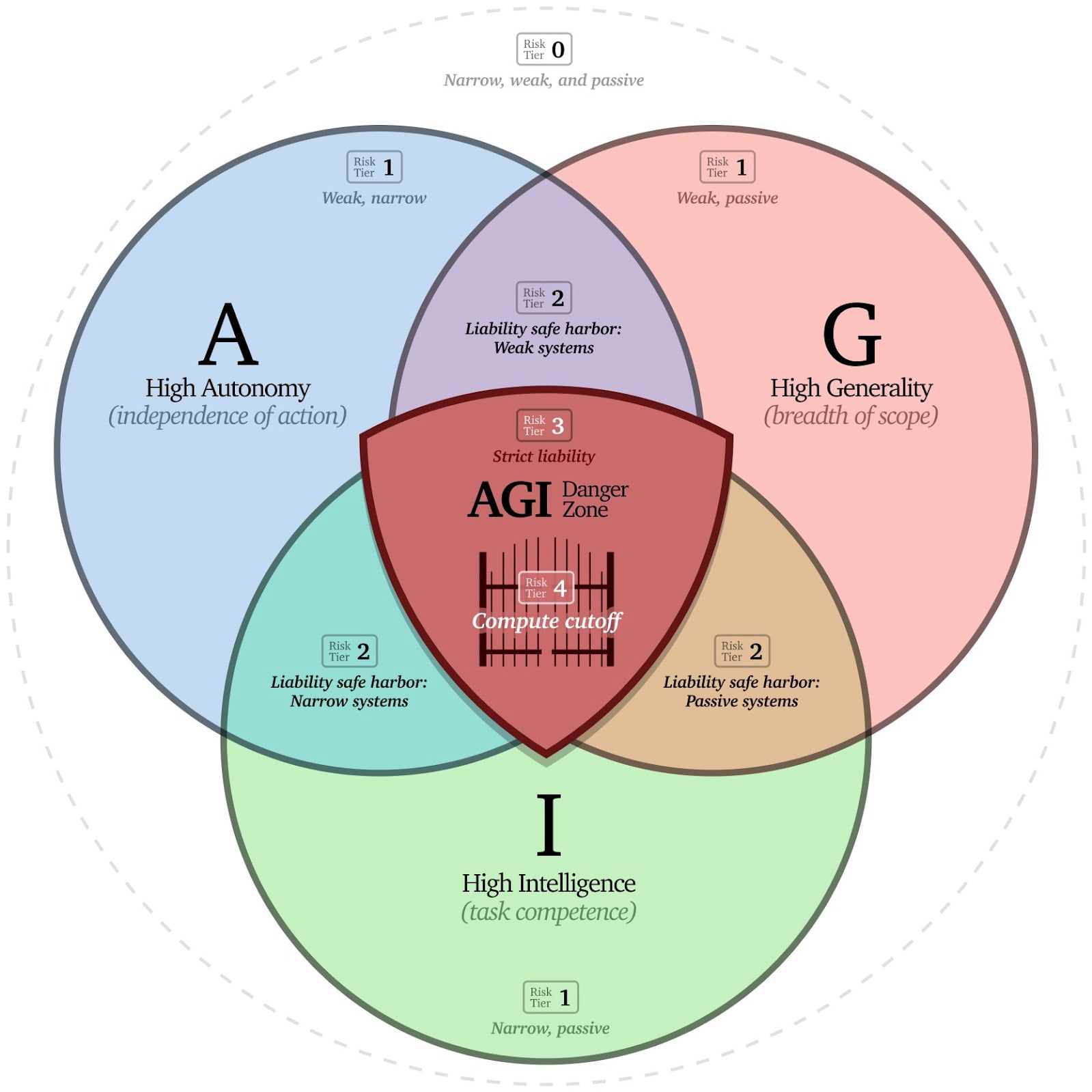

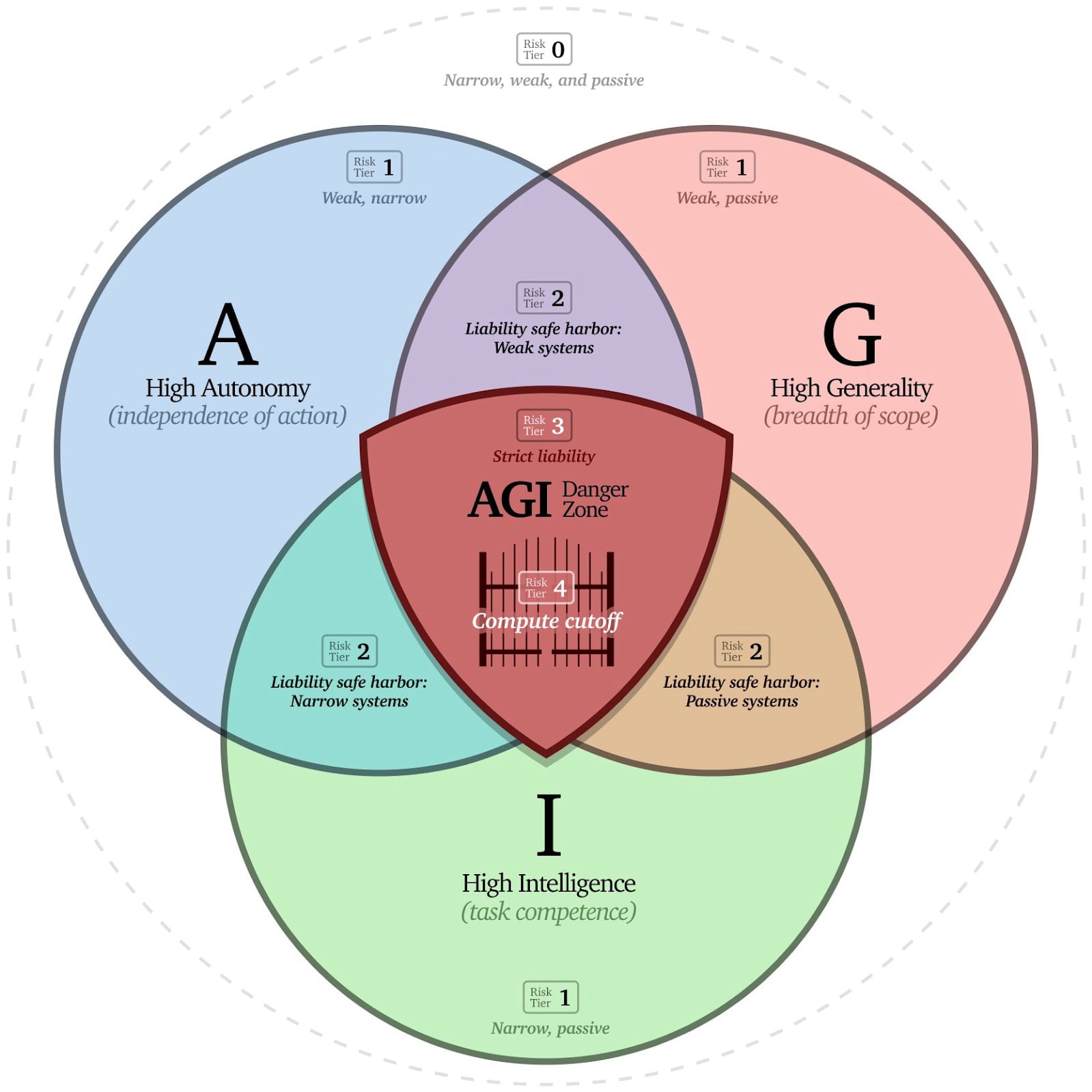

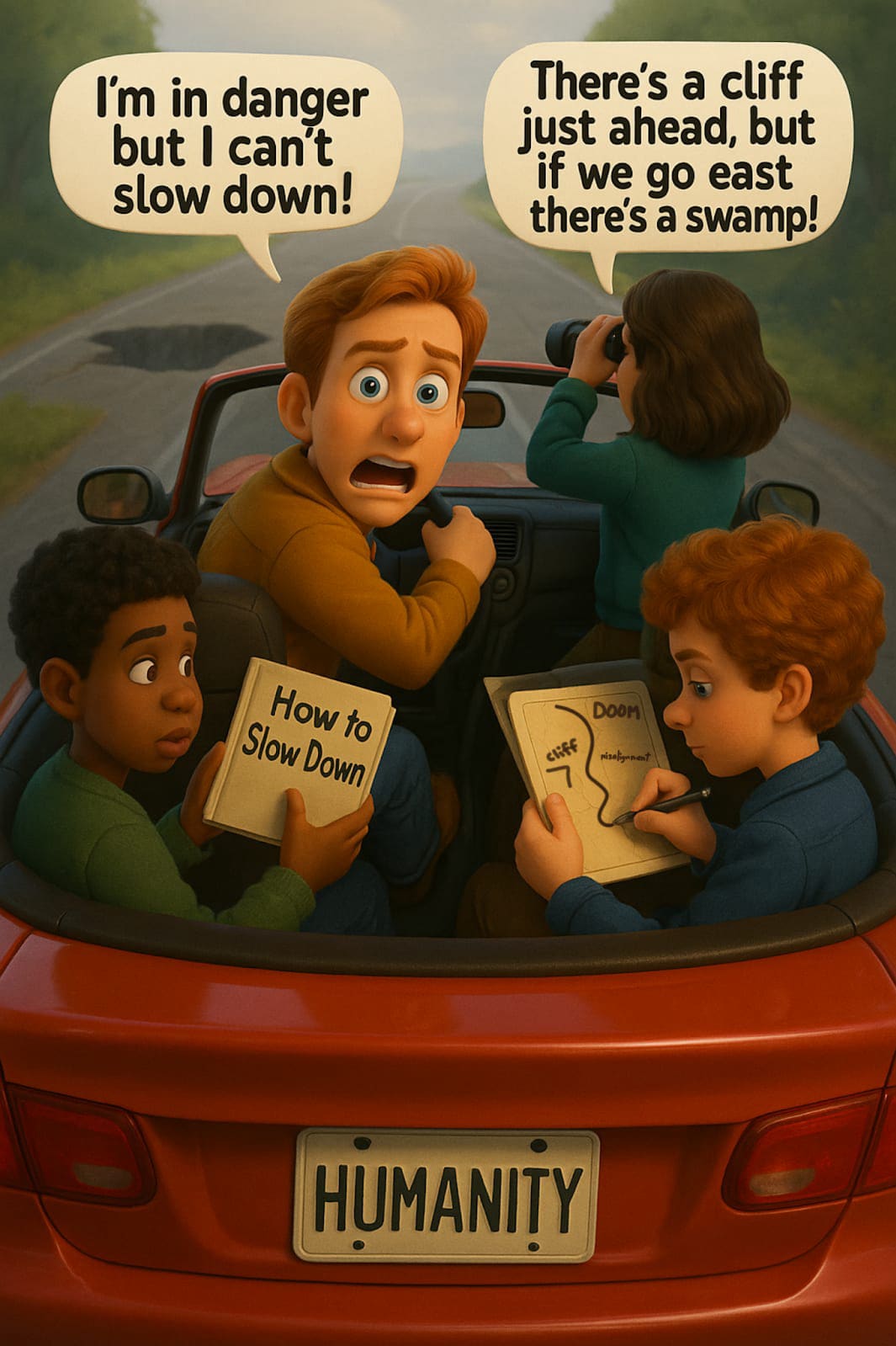

(09:44 ) 3. Why AGI Might Be Bad (Abridged Edition)

(14:23 ) 4. Existence Proofs For Restraint: Sometimes, We Can Just Say No

(18:46 ) 5. So, What's The Alternative Path?

(27:19 ) 6. Addressing The Inevitable Objections

(30:35 ) 7. Conclusion: Choosing Not To Roll The Dice

(34:08 ) The Problem of "Aligned To Whom?"

(36:25 ) The Target is Moving

(41:57 ) Why Do People Want AGI?

(47:21 ) Solving Alignment is a Category Mistake

(53:23 ) Prior Examples of Coordinated Tech Avoidance

(57:04 ) So What Can We Do?

(01:04:47 ) Brainstorm & Ideation

(01:04:50 ) Potential Topics

(01:07:02 ) Topic Scoring

(01:07:22 ) Selected Concept

(01:07:30 ) Audience & Goals

(01:07:33 ) Target Audience

(01:07:45 ) Audience Benefit

(01:07:59 ) Format & Outline

(01:08:01 ) Deliverable Format

(01:08:08 ) Initial Outline/Storyboard

(01:08:32 ) Sources/Thoughts

---

First published:

May 10th, 2025

Linkpost URL:

https://natezsharpe.substack.com/p/what-if-we-justdidnt-build-agi

---

Narrated by TYPE III AUDIO.
